UC Santa Cruz’s team of computer science and engineering (CSE) Ph.D. students came out victorious by a generous margin during the public benchmark phase of the first-ever Amazon Alexa Prize SimBot Challenge, far surpassing other teams in the university competition focused on advancing virtual assistant technology.

In this challenge, teams build robots that can execute real-world household tasks, like making coffee and cleaning a room, while continuously learning from their environment. More complex tasks, such as preparing breakfast, require the robots to perform commonsense reasoning, for example figuring out what to do when the microwave they need to use already has food in it. This technology could help bring to market personal assistants designed to significantly help older adults and people living with disabilities, as well as the general population.

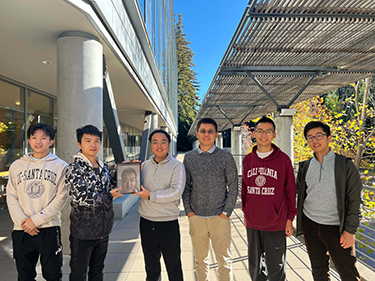

The UCSC team, called SlugJARVIS, is advised by Assistant Professor of CSE Xin (Eric) Wang and includes six CSE Ph.D. students who all study in Wang’s ERIC lab. Wang says the competition aligns perfectly with his research interests in embodied artificial intelligence (robots operating in 3D environments), natural language processing, and computer vision, and creates the opportunity to do novel and impactful work within these areas.

“I’m very proud of my students who are very up to the challenge,” Wang said. “They are excited about both the research outcomes and the cash prize in the end. This new challenge introduces many unique research problems in embodied AI, and we are eager to explore more along this line of work.”

The competition consists of two phases: the public benchmark phase, which began in January and ended Friday, April 29, and the live interaction phase. The team was selected to participate in this challenge in November 2021 after submitting a detailed project proposal, and was awarded a $250,000 grant and additional Amazon Web Services credits.

The SlugJARVIS team programmed their robot to be a helpful at-home assistant that can handle a complex learning environment through three main steps. First, the robot breaks a task into manageable subgoals. Next, it creates a semantic map of its environment, keeping track of how the environment may be changing around it. Then, it uses this information to turn the subgoals into a detailed plan of action for moving through the space and interacting with objects.
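For readers curious how such a three-step pipeline might fit together, here is a minimal Python sketch. It is an illustration only, not the team's code: the subgoal table, the `SemanticMap` class, and the action names (`navigate_to`, `explore_for`, and so on) are all hypothetical, and a real system would infer subgoals and object locations from language and vision models rather than from a lookup table.

```python
from dataclasses import dataclass, field

# Hypothetical lookup table: each task maps to ordered (verb, object) subgoals.
# A real system would likely predict these rather than hard-code them.
SUBGOAL_LIBRARY = {
    "make coffee": [
        ("pick_up", "mug"),
        ("place", "coffee_machine"),
        ("toggle", "coffee_machine"),
    ],
}


def decompose(task):
    """Step 1: break a task into manageable subgoals."""
    return list(SUBGOAL_LIBRARY[task])


@dataclass
class SemanticMap:
    """Step 2: remember where objects were last seen (assumed 2D positions)."""
    locations: dict = field(default_factory=dict)

    def observe(self, obj, position):
        # Overwrite the old entry so the map follows a changing environment.
        self.locations[obj] = position

    def locate(self, obj):
        return self.locations.get(obj)


def plan(subgoals, semantic_map):
    """Step 3: turn subgoals into a flat list of movement and interaction actions."""
    actions = []
    for verb, obj in subgoals:
        position = semantic_map.locate(obj)
        if position is None:
            # Object not seen yet: search for it before interacting.
            actions.append(("explore_for", obj))
        else:
            actions.append(("navigate_to", position))
        actions.append((verb, obj))
    return actions
```

In this sketch, calling `plan(decompose("make coffee"), world)` on a `SemanticMap` named `world` yields alternating movement and interaction steps. If an object moves, calling `world.observe(...)` with its new position and planning again produces an updated route.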

During the public benchmark phase, the teams’ robots carried out tasks entirely in a virtual, simulated environment. The UCSC team’s robot has successfully navigated tasks in unknown environments more than 15 percent of the time, while the closest competitor has done so about 12 percent of the time.

Team SlugJARVIS will now advance to the live interaction phase of the competition and have a chance to win the $500,000 first-place cash prize. The team plans to travel to Seattle this summer to attend a bootcamp held by Amazon to kick off the second half of the competition.

During this second phase, the robot will interact with and talk to a real human, which introduces additional natural language processing challenges: the robot needs to actively ask questions when it needs help and be able to correct course when a human makes a mistake. This means the team will need to give the robot the ability to carry out dialogue; as of now, it cannot speak.

“We spent a lot of time on this challenge and currently we are getting really great results,” said team leader and Ph.D. student Jing Gu. “Everything’s really exciting – we hope we can win.”