Campus News

Seismologists use deep learning to forecast earthquakes

A team of researchers at UC Santa Cruz and the Technical University of Munich created a new model that uses deep learning to forecast aftershocks.

For more than 30 years, the models that researchers and government agencies use to forecast earthquake aftershocks have remained largely unchanged. While these older models work well with limited data, they struggle with the huge seismology datasets that are now available.

To address this limitation, a team of researchers at the University of California, Santa Cruz and the Technical University of Munich created a new model that uses deep learning to forecast aftershocks: the Recurrent Earthquake foreCAST (RECAST). In a paper published today in Geophysical Research Letters, the scientists show that the deep learning model is more flexible and scalable than the earthquake forecasting models currently in use.

The new model outperformed the current standard, the Epidemic Type Aftershock Sequence (ETAS) model, on earthquake catalogs of about 10,000 events or more.

“The ETAS model approach was designed for the observations that we had in the 80s and 90s when we were trying to build reliable forecasts based on very few observations,” said Kelian Dascher-Cousineau, the lead author of the paper, who recently completed his PhD at UC Santa Cruz. “It’s a very different landscape today.” Now, with more sensitive equipment and larger data storage capabilities, earthquake catalogs are much larger and more detailed.

“We’ve started to have million-earthquake catalogs, and the old model simply couldn’t handle that amount of data,” said Emily Brodsky, a professor of earth and planetary sciences at UC Santa Cruz and a co-author of the paper. In fact, one of the main challenges of the study was not designing the new RECAST model itself but getting the older ETAS model to run on huge datasets so that the two could be compared.

“The ETAS model is kind of brittle, and it has a lot of very subtle and finicky ways in which it can fail,” said Dascher-Cousineau. “So, we spent a lot of time making sure we weren’t messing up our benchmark compared to actual model development.”

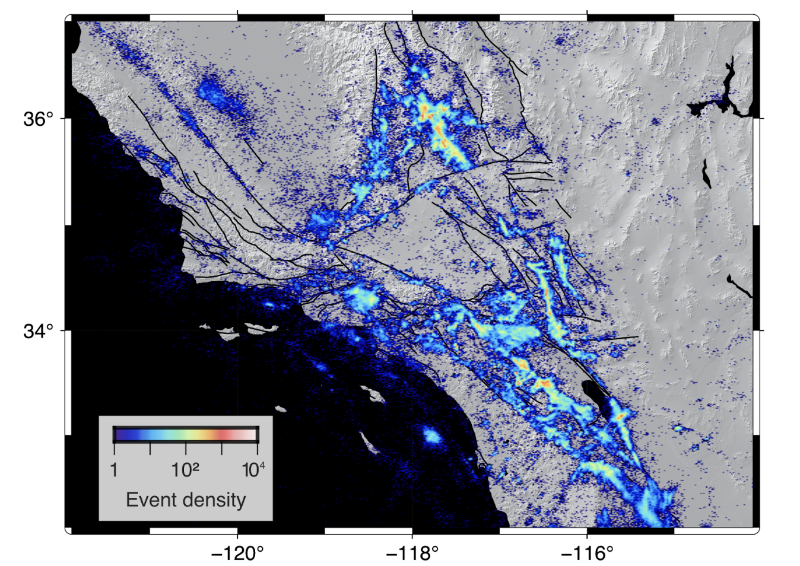

Dascher-Cousineau says that if deep learning models are to keep being applied to aftershock forecasting, the field needs a better system for benchmarking them. To demonstrate RECAST’s capabilities, the group first used an ETAS model to simulate a synthetic earthquake catalog. After working with the synthetic data, the researchers tested RECAST on real data from the Southern California earthquake catalog.

They found that the RECAST model, which can essentially learn how to learn, performed slightly better than the ETAS model at forecasting aftershocks, particularly as the amount of data increased. For larger catalogs, RECAST also required significantly less computational effort and time.

This is not the first time scientists have tried using machine learning to forecast earthquakes, but until recently the technology was not quite ready, said Dascher-Cousineau. New advances in machine learning make the RECAST model more accurate and more easily adaptable to different earthquake catalogs.

The model’s flexibility could open up new possibilities for earthquake forecasting. With the ability to adapt to large amounts of new data, models that use deep learning could potentially incorporate information from multiple regions at once to make better forecasts about poorly studied areas.

“We might be able to train on New Zealand, Japan, California and have a model that’s actually quite good for forecasting somewhere where the data might not be as abundant,” said Dascher-Cousineau.

Deep learning models will also eventually allow researchers to expand the types of data they use to forecast seismicity.

“We’re recording ground motion all the time,” said Brodsky. “So the next level is to actually use all of that information, not worry about whether we’re calling it an earthquake or not an earthquake but to use everything.”

In the meantime, the researchers hope the model sparks discussions about the possibilities of the new technology.

“It has all of this potential associated with it,” said Dascher-Cousineau. “Because it is designed that way.”